As a follow-up to my previous article about deployment tools for Kubernetes, I want to show how you can use Jsonnet to simplify the description of jobs in your .gitlab-ci.yml.

Given

There is a monorepo that contains:

- 10 Dockerfiles

- 30 deployment descriptions

- 3 environments: devel, stage and prod

Task

Configure a pipeline in which:

- Docker images are built when a git tag with a version number is pushed.

- Each deployment runs when changes are pushed to the environment's branch, and only if files in that deployment's directory have changed.

- Each environment has its own gitlab-runner with a distinct tag, and that runner performs deployments only to its own environment.

- Not every application is deployed to every environment, so the pipeline must be described in a way that allows exceptions.

- Some deployments use git submodules and must run with the GIT_SUBMODULE_STRATEGY=normal environment variable set.

Describing all this by hand may seem like a real hell, but don't despair: armed with Jsonnet, we can do it easily.
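The Jsonnet code itself is not part of this excerpt, so here is a minimal Python sketch of the same idea: generate every job from a compact inventory instead of writing up to 90 deploy jobs (30 deployments × 3 environments) by hand. Everything in it is an assumption for illustration: the dockerfiles/ and deployments/ directory layout, the DOCKERFILES and DEPLOYMENTS tables, runner tags equal to environment names, and the echo placeholders in place of real build and deploy commands. The GitLab keywords used (rules with if and changes, tags, variables, GIT_SUBMODULE_STRATEGY) are standard. Since JSON is also valid YAML, the printed output can be saved as .gitlab-ci.yml.

```python
import json

# Hypothetical inventory; in a real repository it would mirror the monorepo layout.
ENVIRONMENTS = ["devel", "stage", "prod"]

DOCKERFILES = ["app1", "app2"]  # hypothetical image names

DEPLOYMENTS = {
    # "skip": environments this app must NOT be deployed to (the exceptions).
    # "submodules": the deployment needs git submodules checked out.
    "app1": {"skip": [], "submodules": False},
    "app2": {"skip": ["prod"], "submodules": True},
}


def build_job(image):
    """Build job that runs only when a git tag is pushed."""
    return {
        "stage": "build",
        "script": [f"echo build dockerfiles/{image} as $CI_COMMIT_TAG"],  # placeholder
        "rules": [{"if": "$CI_COMMIT_TAG"}],
    }


def deploy_job(name, env, opts):
    """Deploy job for one deployment in one environment."""
    job = {
        "stage": "deploy",
        "tags": [env],  # assumption: each env's runner is tagged with the env name
        "script": [f"echo deploy deployments/{name} to {env}"],  # placeholder
        "rules": [
            {
                # run on pushes to the environment branch...
                "if": f'$CI_COMMIT_BRANCH == "{env}"',
                # ...and only if this deployment's directory changed
                "changes": [f"deployments/{name}/**/*"],
            }
        ],
    }
    if opts.get("submodules"):
        job["variables"] = {"GIT_SUBMODULE_STRATEGY": "normal"}
    return job


def generate_pipeline():
    pipeline = {"stages": ["build", "deploy"]}
    for image in DOCKERFILES:
        pipeline[f"build:{image}"] = build_job(image)
    for name, opts in DEPLOYMENTS.items():
        for env in ENVIRONMENTS:
            if env in opts.get("skip", []):
                continue  # exception: this app is not deployed to this environment
            pipeline[f"deploy:{env}:{name}"] = deploy_job(name, env, opts)
    return pipeline


if __name__ == "__main__":
    # JSON output is also valid YAML, so it can be committed as .gitlab-ci.yml
    print(json.dumps(generate_pipeline(), indent=2))
```

Keeping exceptions and the submodule flag as data in one table means adding a new deployment is a one-line change, which is the same benefit the Jsonnet approach is after.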

Hi!

Recently, many cool automation tools have been released, both for building Docker images and for deploying to Kubernetes. So I decided to play with GitLab a little, study its capabilities and, of course, configure a pipeline.

The source of inspiration for this work was the kubernetes.io website, which is automatically generated from its source code.

For each new pull request, a bot automatically generates a preview version with your changes and provides a link for review.

I tried to build a similar process from scratch, but entirely on GitLab CI and the free tools I use to deploy applications to Kubernetes. Today I will finally tell you more about them.

The article covers the following tools: Hugo, qbec, kaniko, git-crypt and GitLab CI with its dynamic environments feature.
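To make the dynamic environments feature concrete, here is a minimal Python sketch that produces the pair of jobs GitLab uses for a per-merge-request preview, similar to the kubernetes.io bot described above. The keywords environment:name, environment:url, on_stop and action: stop are standard GitLab CI syntax; the job names, the example.com domain and the echo scripts are hypothetical placeholders, not the article's actual pipeline (the Hugo, kaniko and qbec commands are deliberately left out).

```python
import json


def review_jobs(domain="example.com"):
    """Return a deploy job and a stop job for a per-merge-request preview.

    `domain` and the echo scripts are placeholders.
    """
    # One environment per branch; GitLab creates it on the first deploy.
    env_name = "review/$CI_COMMIT_REF_SLUG"
    deploy = {
        "stage": "deploy",
        "script": ["echo build the site and deploy the preview"],  # placeholder
        "environment": {
            "name": env_name,
            # GitLab shows this link in the merge request
            "url": f"https://$CI_ENVIRONMENT_SLUG.{domain}",
            "on_stop": "stop_review",  # job that tears the preview down
        },
        "rules": [{"if": "$CI_MERGE_REQUEST_IID"}],  # merge request pipelines only
    }
    stop = {
        "stage": "deploy",
        "variables": {"GIT_STRATEGY": "none"},  # no checkout needed to clean up
        "script": ["echo remove the preview deployment"],  # placeholder
        "environment": {"name": env_name, "action": "stop"},
        "rules": [{"if": "$CI_MERGE_REQUEST_IID", "when": "manual"}],
    }
    return {"review": deploy, "stop_review": stop}


if __name__ == "__main__":
    print(json.dumps(review_jobs(), indent=2))
```

Both jobs must share the same environment name; that is how GitLab knows which job stops which preview.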

Not long ago, the team at LINBIT presented their new SDS solution, Linstor. It is completely free storage built on proven technologies: DRBD, LVM and ZFS. Linstor combines simplicity with a well-designed architecture, which lets it achieve stability and quite impressive results.

Today I would like to tell you a little about it and show how easily it can be integrated with OpenNebula using linstor_un, a new driver that I developed specifically for this purpose.

Linstor combined with OpenNebula lets you build a high-performance, reliable cloud that you can easily deploy on your own infrastructure.

Let me tell you how you can safely store SSH keys on a local machine without fearing that some application could steal or decrypt them.

This article will be especially useful to those who have not found an elegant solution since the paranoia of 2018 and still keep their keys in $HOME/.ssh.

To solve this problem, I suggest using KeePassXC, one of the best password managers: it uses strong encryption algorithms and also has built-in SSH agent integration.

This lets you safely store all your keys directly in the password database and have them added to the system automatically when the database is opened. Once the database is closed, the SSH keys can no longer be used.
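One way to confirm this behaviour (keys available while the database is open, gone once it is locked) is to ask the running agent what it holds with ssh-add -l. Below is a minimal Python sketch that wraps that command. It assumes OpenSSH's usual listing format (bit length, fingerprint, comment, key type in parentheses) and its documented exit codes (1 when the agent holds no identities, 2 when it cannot be reached); treat the parser as a convenience, not a stable interface.

```python
import subprocess


def parse_ssh_add_list(output):
    """Parse `ssh-add -l` output into (bits, fingerprint, comment, key_type) tuples."""
    keys = []
    for line in output.splitlines():
        parts = line.split()
        # Expected shape: "<bits> <fingerprint> <comment...> (<TYPE>)"
        if len(parts) < 3 or not parts[0].isdigit():
            continue  # e.g. "The agent has no identities."
        bits, fingerprint = int(parts[0]), parts[1]
        key_type = parts[-1].strip("()")
        comment = " ".join(parts[2:-1])  # comment may contain spaces or be empty
        keys.append((bits, fingerprint, comment, key_type))
    return keys


def loaded_keys():
    """List the keys held by the agent that SSH_AUTH_SOCK points to."""
    result = subprocess.run(["ssh-add", "-l"], capture_output=True, text=True)
    if result.returncode == 2:
        raise RuntimeError("cannot connect to the SSH agent")
    # Exit code 1 means the agent is reachable but holds no identities;
    # the parser skips that message and returns an empty list.
    return parse_ssh_add_list(result.stdout)


if __name__ == "__main__":
    for bits, fingerprint, comment, key_type in loaded_keys():
        print(f"{key_type} {bits} {fingerprint} {comment}")
```

Run it once with the database unlocked and once after locking it; the second run should list nothing.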

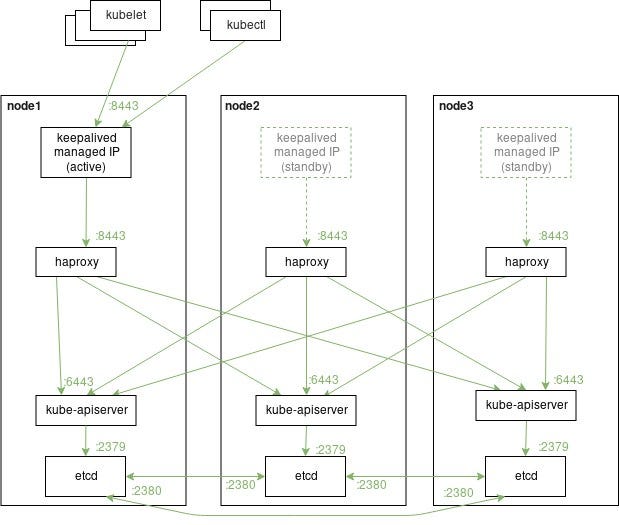

If you want to make this setup more reliable, you can add an haproxy layer between keepalived and kube-apiserver.

Just install the haproxy package on your system and add the following configuration to the /etc/haproxy/haproxy.cfg file: